Data Pipeline Design Patterns

Data pipeline design patterns are the blueprint for constructing scalable, reliable, and efficient data processing workflows. These patterns provide a structured approach to solving common data pipeline challenges, such as handling large volumes of data, processing data in real-time, and ensuring data quality. By leveraging these design patterns, businesses can streamline their data operations, reduce latency, and maximize the value derived from their data assets.

We will explore the mechanics behind the most common frameworks, including batch processing, stream processing, lambda architecture, microservices-based patterns, and event-driven architectures. Each pattern offers unique advantages and is suited to particular types of data scenarios, from real-time analytics to high-throughput data processing. Understanding these patterns and their applications is crucial for anyone involved in the design and operation of data pipelines, offering insights into creating more effective and scalable data processing solutions.

What is Data pipeline architecture?

A data pipeline engineer will work on a daily basis to effectively design, implement, and manage architectures of data pipelines that should process flow from its origin to its destination dependably. These architectures of data pipelines ensure that the business process is executed by providing them with data right from ingestion up to deriving actionable insights. Below is a much deeper dive into what actually has to be the data pipeline architecture from hands-on in the field.

A data pipeline architecture simply shows the roadmap pointing out the strategy with which the data will be collected, processed, and eventually used within an organization. The process typically begins with the data ingestion stage that may implicate mechanisms on bringing in data from various sources: databases, file systems, or even live streaming data. All right, the processed data are then processed further in this step of data preparation — in essence, the transformation of the raw data to an improved form through a number of cleaning, validation, and aggregation tasks. “In fact, in these requisites is where one of the toughest challenges for an engineer lies — the processing needs to be efficient and, at the same time, scalable enough to fit the increasing requests from the data within the organization.”

The processed data is then moved to storage solutions, where it’s organized and made accessible for analysis. Modern data pipelines often leverage cloud storage and computing resources for this purpose, given their scalability and cost-effectiveness. Finally, the data is analyzed, visualized, or used to trigger specific business processes, delivering actionable insights that drive decision-making.

A critical aspect of designing an effective data pipeline architecture is selecting the right tools and technologies. These range from scripting data processing with programming languages such as Python, processing into big data frameworks like Apache Spark for large-scale data workloads, and deploying in cloud platforms such as AWS or Google Cloud for scalable storage and computing resources. The architecture must cover securing the data, compliance with data governance policies, and support for real-time processing of applications that have actual data response requirements.

In practice, the impact of well-designed data pipeline architecture is profound. For instance, in the financial sector, real-time data pipelines are crucial for fraud detection systems that monitor transaction data for suspicious activities. In the e-commerce space, batch processing pipelines crunch vast amounts of user interaction data overnight to generate personalized product recommendations for millions of users.

Common Data Pipeline Design Patterns

In modern data management, the design of a data pipeline architecture is vital for transforming raw data into valuable insights. It is a comprehensive plan that orchestrates the movement and processing of data through various stages in a system, ensuring it is efficiently transported, transformed, and made available for analysis. Let’s explore the common design patterns in data pipeline architecture, detailing each and offering real-world examples.

Batch Processing Pattern

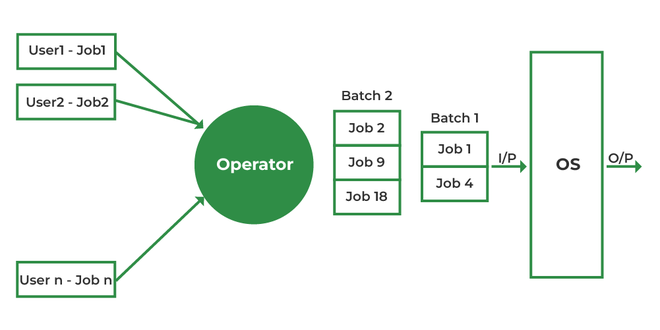

The Batch Processing Pattern is a classic design in data pipeline architecture, rooted in a time-honored approach to data management. It involves compiling and processing data in large, discrete sets or ‘batches’ rather than in a continuous stream. This pattern is especially effective when dealing with significant volumes of data that do not require instant analysis or real-time insights.

How it Works:

In batch processing, data is collected over a set period—this could be every hour, once a day, or even weekly — depending on the needs of the business and the nature of the tasks at hand. Once the data has been gathered, it is processed in a single, extensive operation. This methodical approach can involve various tasks such as data cleaning, transformation, aggregation, and the subsequent loading of the processed data into databases or data warehouses.

Advantages:

One of the key advantages of the batch processing pattern is its simplicity and reliability. Since the processing happens in distinct intervals, it’s often easier to manage and troubleshoot. Batch jobs can be scheduled during off-peak hours, making efficient use of system resources and often resulting in cost savings, particularly when using cloud-based services that charge based on load.

Batch processing is also inherently scalable. As the volume of data grows, the system can adjust by increasing the size of the batches or the frequency of the processing. Furthermore, it often involves fewer complexities related to real-time data consistency checks and concurrency issues, as the data is not changing during the batch processing window.

Real-World Example:

Banks collect transactions made by all customers during the day. Overnight, these transactions are batch processed to update account balances, interest accruals, and to generate statements and reports. Such processing does not need to occur in real-time and can benefit from the focused use of resources during non-operational banking hours.

Another example is in the field of genomics, where research labs perform batch processing of DNA sequencing data. This data is often processed in large batches as it is not time-sensitive and requires extensive computational resources for tasks like sequence alignment and variant calling.

Tools and Technologies:

The tools and technologies commonly associated with batch processing reflect its need for robustness and scalability. Traditional databases and file systems often serve as data sources. For the processing itself, Apache Hadoop has been a long-standing choice due to its ability to handle large-scale data across distributed systems. Other tools include Apache Spark, which can perform batch processing more rapidly than Hadoop’s MapReduce, and cloud services like Amazon S3 and Google Cloud Storage for storing the large volumes of data that are typical in batch processing scenarios.

Stream Processing Pattern

The Stream Processing Pattern is a modern data pipeline design pattern tailored for scenarios that require immediate insights and actions from incoming data. It’s distinct from batch processing in that data is continuously ingested and processed in near real-time, rather than being accumulated and processed at intervals. This approach is critical for use cases where even the slightest delay in data processing could be detrimental, such as in financial trading, real-time analytics, and monitoring systems.

How it Works:

Stream processing involves breaking down data into small, manageable chunks, often referred to as records or events, which are processed sequentially and incrementally as they arrive. This allows for the instantaneous transformation, enrichment, and analysis of data, enabling immediate decision-making. Stream processing systems typically require a way to handle time-sensitive operations, managing out-of-order data, and providing mechanisms for state management and windowing — grouping data based on time or other attributes.

Advantages:

One of the main advantages of stream processing is its ability to handle high-throughput data with low latency, delivering insights almost as soon as the data is generated. This pattern also enhances the agility of data-driven applications, allowing them to adapt to new information quickly. Stream processing supports complex event processing (CEP), enabling the correlation of events over time to detect patterns and anomalies.

Real-World Example:

An illustrative example of stream processing in action is seen in online fraud detection systems. Here, a stream processing pipeline analyzes transaction data in real-time, checking for unusual patterns or anomalies that could indicate fraudulent activity. If a potential fraud is detected, alerts are triggered instantaneously to mitigate any potential loss.

In the realm of IoT, smart cities employ stream processing to monitor traffic flow through sensors. Data collected from these sensors is processed on the fly to adjust traffic signals in real-time, alleviating congestion and reducing the likelihood of accidents.

Tools and Technologies:

Tools commonly associated with the Stream Processing Pattern include Apache Kafka, which acts as a distributed streaming platform, and Apache Flink or Storm, both of which are stream processing frameworks capable of handling complex processing pipelines with high reliability and at scale. Cloud services such as Amazon Kinesis provide managed environments to run stream processing workloads with integrations to other cloud services for storage and analytics.

Lambda Architecture Pattern

The Lambda Architecture Pattern is a hybrid data processing model that combines the strengths of both batch and stream processing techniques to handle massive datasets with a balance of latency and throughput. It’s designed to provide a robust system that can produce both accurate and real-time analytics.

How it Works:

Lambda Architecture is built on three layers: the batch layer, the serving layer, and the speed layer.

The batch layer handles the large-scale historical data analysis. It’s responsible for the immutable, comprehensive raw data set and pre-computes the batch views.

The serving layer indexes the batch views so that they can be queried in a low-latency, ad-hoc way. This layer is essentially the database that supports batch updates and random reads.

The speed layer compensates for the high latency of batch processing by analyzing real-time streaming data. This layer aims to view data that is not covered by the batch layer due to the processing lag.

Advantages:

Lambda Architecture’s main advantage is its ability to balance latency and throughput while providing a fault-tolerant system that can scale with data volume. By maintaining two paths for data processing, it ensures that real-time analytics are not hampered by delays in batch processing and that all incoming data contributes to the overall analytical insights.

Real-World Example:

A common application of Lambda Architecture is seen in the context of e-commerce platforms, where it’s essential to have up-to-date recommendations for users based on their activities. The batch layer processes all user activity up to a certain point to provide a comprehensive recommendation model, while the speed layer updates this model in real-time with the latest user actions, such as clicks, searches, or purchases.

Tools and Technologies:

The tools that facilitate Lambda Architecture are diverse, often involving a combination of technologies like Apache Hadoop for batch processing, Apache Storm or Spark for real-time stream processing, and NoSQL databases like Apache Cassandra for the serving layer. Cloud services such as Amazon Web Services (AWS) Lambda can also be part of the speed layer, processing events and data in real-time.

Microservices-based Pattern

The Microservices-based Pattern in data pipeline architecture refers to a design where the pipeline is composed of a collection of small, independent, and loosely coupled services. Each microservice is designed to perform a specific function or process a particular type of data, and together, they create a flexible, scalable, and resilient system.

How it Works:

In this pattern, each microservice is responsible for a discrete portion of the data processing task and operates as a separate entity. Communication between services typically happens over a lightweight mechanism such as HTTP REST or message queuing. Data flows from one service to another through these communication channels, often with each service transforming or enriching the data before passing it along.

Advantages:

The microservices-based pattern promotes a highly scalable and fault-tolerant system. If one microservice fails, it does not bring down the entire pipeline, allowing for more graceful error handling and recovery. This pattern also allows for easier updates and maintenance since each service can be updated independently without affecting the entire system. Moreover, it facilitates the scaling of individual components in response to varying loads, contributing to efficient resource utilization.

Real-World Example:

In the realm of online streaming services, such as Netflix, a microservices-based data pipeline is critical. Different microservices are responsible for various tasks like user authentication, video transcoding, content delivery, and usage analytics. This approach allows Netflix to update its algorithms, add new features, and scale specific aspects of its service without disrupting the entire platform.

See below for a SQL interview question from Netflix:

List Top 10 most watched titles by each Country based on the watched time. Only List Out “United States”, “Canada”, and “Egypt”

Output should be Country, Title, Unique_Users_Watched, Total_Time_Spent_Hours. Sort by unique users count in reverse alphabetical order

| video_id | decd6ac7 |

| type | Movie |

| title | Dick Johnson Is Dead |

| duration | 90 min |

| rating | PG-13 |

| country | United States |

| user_id | 44736d |

| name | Aaingini |

| city | New Orleans |

| country | United States |

| [email protected] | |

| created_at | 10/17/19 9:21 |

| last_login_at | 12/21/20 2:09 |

| view_id | a805527 |

| user_id | 11d775 |

| video_id | 852beff4 |

| device | Unknown |

| start_time | 11/8/22 18:13 |

| end_time | 11/9/22 0:17 |

Interested in the solution? See Here!

Tools and Technologies:

Microservices in data pipelines are often containerized using technologies like Docker, which packages services and their dependencies into standardized units for software development. Orchestration tools such as Kubernetes help manage these containers, ensuring they are correctly deployed, scaled, and maintained. For communication, microservices might use message brokers like RabbitMQ or Kafka, which facilitate data flow between services without direct linkages, enhancing decoupling and resilience.

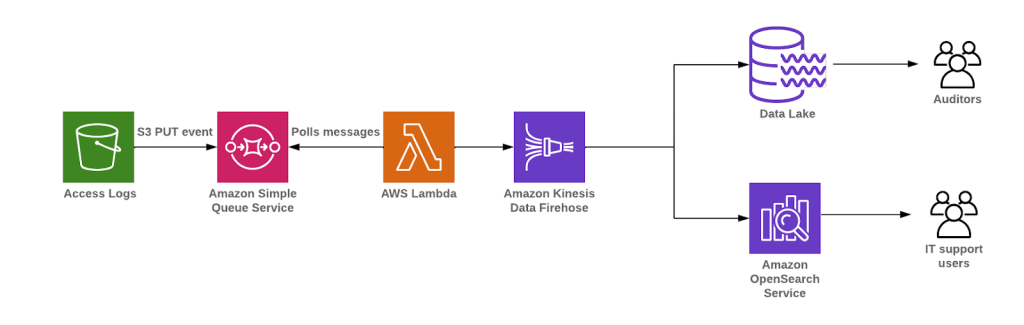

Event-driven Architecture Pattern

The Event-driven Architecture Pattern is a dynamic and responsive approach to designing data pipelines, particularly effective for systems that operate on real-time data and actions. This pattern centers around the detection, consumption, and reaction to events—significant changes in state or notable occurrences within a system.

How It Works:

In an event-driven architecture, components, often referred to as services, are loosely coupled and communicate asynchronously by emitting and listening for events. An event producer, such as a user action or system process, generates an event and publishes it. This event is then detected by an event processor or broker, which routes it to the appropriate service or consumer that reacts to the event, often by triggering a specific process or workflow.

Advantages:

Event-driven architectures are inherently scalable and adaptable. By decoupling the services, it allows for independent scaling and updating, which is essential in environments where different components may have varied load profiles and scaling requirements. Additionally, this pattern enhances system resilience and can lead to more responsive and maintainable systems, as it’s easy to add or modify functionalities without impacting other parts of the system.

Real-World Example:

A classic real-world application of the event-driven pattern is seen in online retail platforms. When a customer places an order, this action creates an event that triggers various services within the platform, such as updating the inventory service, notifying the shipping service, and sending order confirmation to the customer. Each of these services responds to the event independently, carrying out their respective tasks in a coordinated manner.

Another example is in stock trading platforms, where an event-driven system can monitor stock prices in real-time. When a stock hits a certain threshold, an event is emitted, prompting immediate action, such as the execution of a trade or notification to traders.

Tools and Technologies:

Event-driven architectures are facilitated by tools that enable event notification and message passing, such as Apache Kafka, RabbitMQ, or AWS SNS (Simple Notification Service) and SQS (Simple Queue Service). These tools ensure that events are delivered reliably and can be processed in order or concurrently, depending on the system’s requirements.

Selecting the Right Design Pattern

Selecting the right design pattern for your data pipeline is a decision that significantly impacts the functionality of your data processing capabilities. This decision should be guided by a clear understanding of your data’s nature, the requirements of the business processes it supports, and the specific challenges you expect to face.

Here’s how you can navigate the decision-making process:

- Understand your data characteristics

Begin by assessing the volume, velocity, variety, and veracity of your data—often referred to as the four Vs of big data. Large volumes of historical data may lean towards batch processing, whereas high-velocity data that streams in real-time will likely require stream processing or a more reactive approach like event-driven architecture.

- Identify your processing needs

Consider whether your business requires real-time analytics, which is crucial in industries like finance or telecommunications. If your focus is more on data analysis for trends, batch processing might suffice. For use cases that require both, such as personalized user experience on a digital platform, the lambda architecture could be the best fit.

- Evaluate System Complexity and Scalability

Microservices-based and event-driven architectures offer high scalability and are suitable for complex systems with many moving parts. However, they also add complexity in terms of deployment and management. Assess whether your organization has the expertise and resources to manage such architectures.

- Consider Data Accuracy

If your business decisions rely on the freshest data, prioritize stream processing or event-driven architectures that process data as it arrives. If accuracy over time is more critical and your operations can tolerate some delay, then batch or lambda architectures may be appropriate.

- Regulatory and Compliance Requirements

Some industries have stringent data handling and processing regulations. Ensure that the chosen pattern meets these requirements, especially when it comes to data retention, processing, and auditing.

- Review Existing Infrastructure

Look at your current infrastructure to determine if it supports the implementation of the chosen pattern. For instance, adopting a microservices-based pattern might require a container orchestration system like Kubernetes, while event-driven architecture could necessitate a robust messaging system like Apache Kafka.

- Anticipate Future Evolution

Choose a design pattern that not only meets current needs but can also accommodate future changes in data strategy and business objectives. Consider patterns that allow for iterative development and flexibility in integration.

Before committing to a specific pattern, prototype solutions based on different patterns and conduct performance and stress tests. This will help you gather empirical data on how well each pattern suits your needs and will support a more informed decision.

FAQ

Q: How does batch processing differ from stream processing?

A: Batch processing involves processing data in large, discrete chunks at regular intervals. In contrast, stream processing handles data continuously and incrementally as it’s generated, providing real-time or near-real-time processing capabilities.

Q: Is the Lambda Architecture still relevant with the advent of real-time processing?

A: Yes, the Lambda Architecture remains relevant as it provides a comprehensive approach by combining the thoroughness of batch processing with the immediacy of stream processing, making it suitable for scenarios that require both accurate historical analysis and real-time data processing.

Q: What are the main challenges of implementing a Microservices-based Pattern in data pipelines?

A: Implementing a Microservices-based Pattern can introduce challenges related to service coordination, data consistency, and the complexity of managing multiple independent services. However, it also provides flexibility, scalability, and ease of updates and maintenance.

Q: When should I consider an Event-driven Architecture Pattern for my data pipeline?

A: Consider an Event-driven Architecture Pattern if your system demands high responsiveness to data events, requires decoupled components, and you want to build a scalable system that reacts to changes or specific conditions in real-time.

Q: How do I know if my organization is ready for a complex data pipeline architecture like Microservices or Event-driven?

A: Evaluate your team’s technical expertise, your current infrastructure’s capabilities, and whether the benefits of implementing such a complex system — like scalability and flexibility — align with your long-term business goals.

Q: Can data pipeline design patterns impact the cost of my data operations?

A: Yes, the choice of design pattern can significantly impact operational costs. For example, stream processing may incur higher costs due to the need for resources to handle continuous data flow, whereas batch processing can be more cost-effective by running during off-peak hours.

Conclusion

In closing, embarking on a career in data engineering can be a transformative journey, one that’s within your reach even if you’re starting from scratch. With dedication, the right guidance, and hands-on practice, you can learn how to code and navigate the landscape of data engineering in as little as three months.

Data Engineer Academy offers a structured pathway, tailored by industry professionals, to equip you with the necessary skills and practical experience. Through a personalized plan that adapts to your learning pace and style, we’re committed to not only educating but also to helping you build real-life projects that demonstrate your end-to-end understanding of architectural and cloud solutions.

Book a call with us to start crafting your success story, supported by our job guarantee. You’re not just gaining education; you’re launching a career. Join the ranks of our successful graduates — your future as a data engineer awaits. Book a call and start your journey today.