Data Lake Essentials: Architecture and Optimization Guide for Modern Data Engineers

Data lakes are becoming an essential part of modern data architecture, especially as organizations grapple with ever-growing volumes of raw data, much of it semi-structured or unstructured. A data lake serves as a centralized repository where you can store data in its native format, without defining a structure up front. This flexibility makes it well suited to big data storage and advanced analytics, allowing organizations to unlock insights from all kinds of data sources. To truly harness the power of a data lake, it’s crucial to understand its architecture, including components like ingestion layers, storage, and processing frameworks.

But here’s the thing—maintaining an efficient and optimized data lake isn’t without its challenges. Issues like data duplication, lack of governance, and performance bottlenecks can derail your efforts if not addressed strategically. By exploring foundational data engineering best practices and key optimization techniques, you can ensure your data lake remains a powerful, scalable tool for your team.

Core Concepts of a Data Lake

Data lakes are at the heart of many modern data strategies, empowering organizations to store and analyze data without the constraints of traditional systems. Unlike structured data repositories, a data lake is designed to manage all types of data (structured, semi-structured, and unstructured) at any scale, which gives it considerable flexibility for advanced analytics and machine learning.

Definition and Characteristics

At its core, a data lake is a centralized storage repository that enables you to keep data in its raw, unaltered format. Think of it as an open playground where data scientists, analysts, and engineers can explore information freely without predefined rules or schemas. This makes it a “schema-on-read” system: a structure is applied to the data only when it is read for analysis, which suits dynamic queries and experimentation.
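To make schema-on-read concrete, here is a minimal sketch using only the Python standard library. It assumes the raw zone holds newline-delimited JSON records with inconsistent shapes; the records and the `ORDER_SCHEMA` mapping are hypothetical. The schema lives in the reader, and it is applied only at read time.

```python
import json
from collections.abc import Callable
from typing import Any

# Raw zone: records stored exactly as they arrived (newline-delimited JSON).
# They do not share a consistent shape, and nothing validated them on write.
RAW_LINES = [
    '{"user_id": "42", "event": "click", "ts": "2024-05-01T10:00:00"}',
    '{"user_id": "7", "event": "purchase", "amount": "19.99"}',
]

# Hypothetical schema owned by the reader: field name -> conversion function.
ORDER_SCHEMA: dict[str, Callable[[Any], Any]] = {
    "user_id": int,
    "event": str,
    "amount": float,
}


def read_with_schema(lines: list[str], schema: dict[str, Callable[[Any], Any]]) -> list[dict[str, Any]]:
    """Apply a schema at read time: convert known fields, fill missing ones with None,
    and ignore fields the schema does not mention."""
    rows = []
    for line in lines:
        raw = json.loads(line)
        rows.append({
            field: (cast(raw[field]) if field in raw else None)
            for field, cast in schema.items()
        })
    return rows


rows = read_with_schema(RAW_LINES, ORDER_SCHEMA)
print(rows[0])  # {'user_id': 42, 'event': 'click', 'amount': None}
print(rows[1])  # {'user_id': 7, 'event': 'purchase', 'amount': 19.99}
```

A different team could read the same raw lines with a different schema (for example, one that keeps `ts`) without rewriting anything in storage.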

Scalability is another defining feature of data lakes. Built on distributed systems, data lakes can accommodate vast amounts of information, from daily business transactions to complex machine-generated logs. Because they handle diverse formats, from JSON and XML to images and audio, organizations are not limited to analyzing only the data that fits a predefined schema. For an in-depth comparison with data warehouses, check out Data Lakes and Data Warehouses.

Data Lake vs Data Warehouse: Key Differences

Data lakes and data warehouses may sound like similar tools, but they serve different purposes and differ significantly in design and capabilities. To put it simply, data lakes store raw, unprocessed data, while data warehouses store structured, processed data ready for reporting and visualization. Data warehouses often enforce strict schemas, which means flexibility is traded for reliability and efficiency.

Flexibility is where data lakes shine. Need to add a new type of data? A lake can accept it as-is, because structure is not enforced when data is written. This contrasts sharply with data warehouses, where adding new data types often requires schema changes that slow things down. Data lakes also tend to be more cost-effective, since they run on scalable, low-cost storage, making them a good fit for very large datasets.
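The difference comes down to where the schema is enforced. In this minimal sketch, Python’s built-in `sqlite3` module stands in for a warehouse table with a fixed schema (schema-on-write), and a plain list stands in for the lake’s raw zone. Neither is how a production warehouse or lake is built; the record and its new `device` field are hypothetical.

```python
import json
import sqlite3

# "Warehouse": a table with a fixed schema that is enforced on every write.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, event TEXT)")

# A new record arrives carrying a field the table has never seen.
new_record = {"user_id": 7, "event": "purchase", "device": "mobile"}

try:
    conn.execute(
        "INSERT INTO events (user_id, event, device) VALUES (?, ?, ?)",
        (new_record["user_id"], new_record["event"], new_record["device"]),
    )
    warehouse_accepted = True
except sqlite3.OperationalError as exc:
    # The write fails until someone runs ALTER TABLE to add the column.
    warehouse_accepted = False
    print("warehouse rejected write:", exc)

# "Lake": the raw record is appended unchanged; no schema change is needed.
lake_raw_zone: list[str] = []
lake_raw_zone.append(json.dumps(new_record))
print("lake stored:", lake_raw_zone[-1])
```

The trade-off runs the other way at read time: the warehouse guarantees every row matches its schema, while lake readers must cope with records of varying shape.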

However, greater flexibility and lower costs bring their own challenges, such as governance and performance tuning. Addressing these challenges keeps your data lake efficient and prevents it from degrading into a “data swamp.” For further exploration, consider reviewing Choosing Between a Data Warehouse and a Data Lake, as well as external perspectives like Amazon’s Data Lake vs Data Warehouse vs Data Mart, for a rounded understanding.

By weighing the trade-offs between these two systems, you can decide which is better suited to your needs, whether you’re tackling data analysis, business intelligence, or both.

Building Blocks of Data Lake Architecture

Understanding the architecture behind a data lake is key to creating a system that can handle vast amounts of raw and unstructured data effectively. A well-constructed data lake functions much like a vast, precisely managed ecosystem, where various components work in harmony to ensure seamless data storage, processing, and analysis. Below, we’ll dissect the essential building blocks, from where data rests to how it is managed, governed, and kept secure.

Storage Layer

At the heart of a data lake is its storage layer, the foundational element that determines how effectively you can manage vast and diverse data types. Data lakes rely on distributed storage platforms like the Hadoop Distributed File System (HDFS) or object storage services such as Amazon S3. Both scale out horizontally, so storage capacity can grow along with your data volume.
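One detail of object storage that shapes lake layouts: there are no real directories. An S3-style store is a flat map from key to bytes, and “folders” are just shared key prefixes. The class below is a toy, in-memory stand-in written for illustration (it is not the S3 or boto3 API), and the `raw/` and `curated/` zone names are a hypothetical convention.

```python
from collections.abc import Iterator


class InMemoryObjectStore:
    """Toy stand-in for an S3-style object store: a flat map of key -> bytes.

    There are no directories; "folders" are only shared key prefixes.
    """

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, body: bytes) -> None:
        self._objects[key] = body

    def list_prefix(self, prefix: str) -> Iterator[str]:
        # Listing returns keys in lexicographic order; sorted() mimics that here.
        for key in sorted(self._objects):
            if key.startswith(prefix):
                yield key


store = InMemoryObjectStore()
store.put("raw/clickstream/2024/05/01/part-0000.json", b"...")
store.put("raw/clickstream/2024/05/02/part-0000.json", b"...")
store.put("curated/orders/part-0000.parquet", b"...")

# "Listing a folder" is really filtering keys by prefix.
print(list(store.list_prefix("raw/clickstream/2024/05/")))
```

Because listing is prefix-based, choosing key prefixes carefully (by zone, source, and date) is what makes later steps such as partition pruning cheap.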

Security is another cornerstone of the storage layer. With sensitive information potentially residing in raw datasets, implementing access controls and encryption methods is non-negotiable. Whether it’s regulatory compliance or protecting intellectual property, ensuring storage security safeguards your data’s integrity.

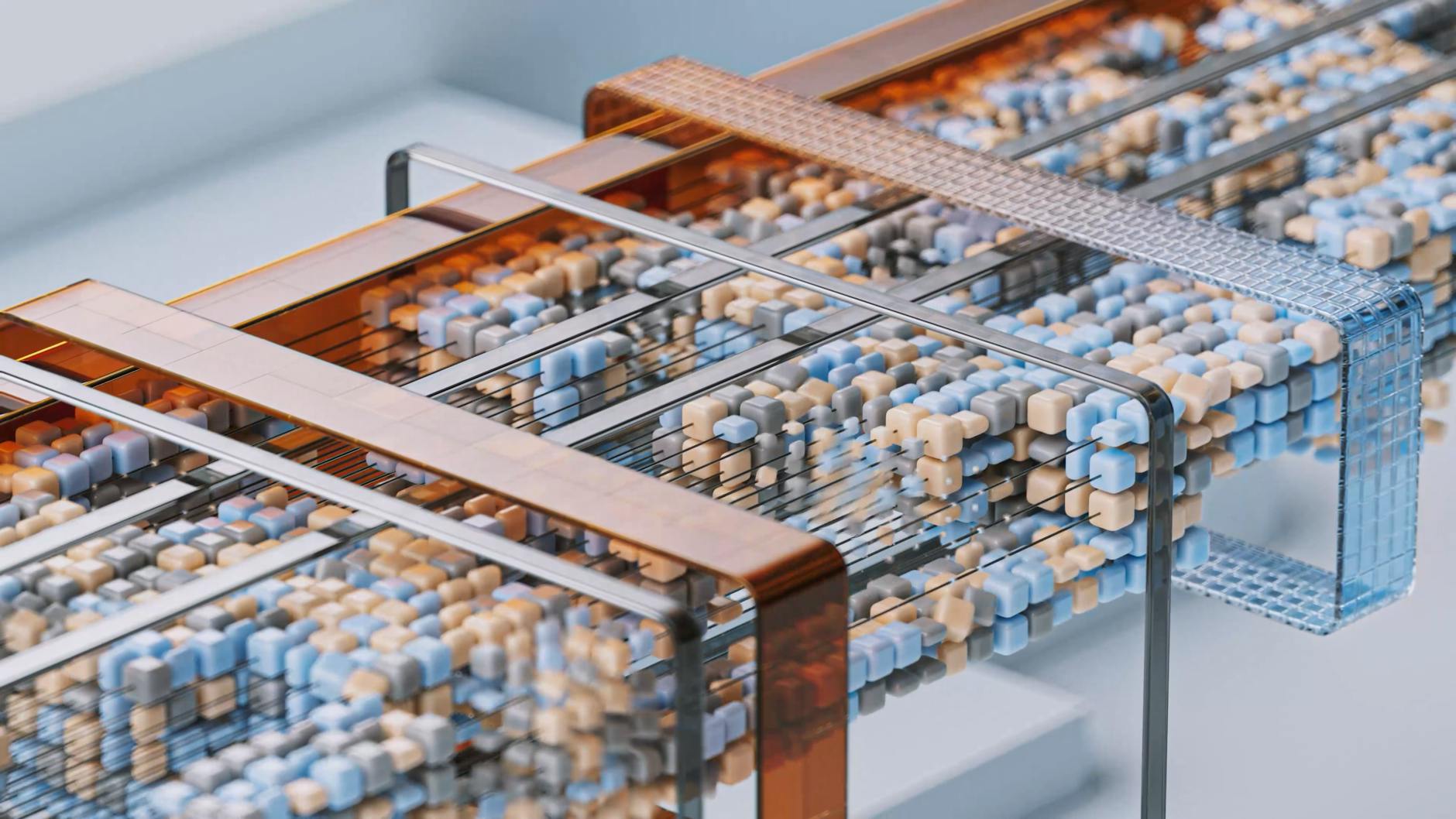

Photo by Google DeepMind

Data Ingestion and Processing

The raw power of a data lake lies in its ability to absorb varied data at scale, from structured logs and spreadsheets to unstructured social media feeds or IoT data. Data ingestion tools such as Apache Kafka, AWS Glue, or Azure Data Factory are often used to move this data into the lake. Between them, they cover both real-time streaming (Kafka’s core use case) and batch loads (what Glue and Data Factory are most commonly used for), enabling you to balance flexibility with performance.
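A batch ingestion job often does little more than land data unmodified in a raw zone, organized so later jobs can find it. The sketch below assumes a local directory as a stand-in for the lake and a hypothetical layout of `raw/<source>/ingest_date=YYYY-MM-DD/<batch_id>.jsonl`; real pipelines would write to HDFS or object storage through their ingestion tool of choice.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_batch(records: list[dict], lake_root: Path, source: str,
               ingest_date: date, batch_id: str) -> Path:
    """Write one batch, unmodified, into the raw zone as newline-delimited JSON.

    Layout (a hypothetical convention):
        raw/<source>/ingest_date=YYYY-MM-DD/<batch_id>.jsonl
    """
    target_dir = lake_root / "raw" / source / f"ingest_date={ingest_date.isoformat()}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / f"{batch_id}.jsonl"
    with target.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")  # one record per line, as received
    return target


lake_root = Path(tempfile.mkdtemp())  # temporary stand-in for the lake's root
path = land_batch(
    [{"sensor": "t-1", "temp_c": 21.5}, {"sensor": "t-2", "temp_c": 19.0}],
    lake_root, source="iot", ingest_date=date(2024, 5, 1), batch_id="batch-0001",
)
print(path.relative_to(lake_root).as_posix())
# raw/iot/ingest_date=2024-05-01/batch-0001.jsonl
```

Keeping the raw copy untouched means a buggy downstream transformation can always be rerun from the original data.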

Once ingested, processing this data becomes the priority. Processing engines like Apache Spark or Flink can be utilized to prepare the data for analysis. If you’re new to data ingestion tooling, explore Data Ingestion: Methods and Tools — A Comprehensive Guide for an in-depth overview of the best tools available.
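At scale, Spark or Flink would run this kind of step across a cluster, but the logic of a typical raw-to-curated job fits in a few lines. This single-process, standard-library sketch parses raw JSON lines, rejects malformed records, and deduplicates by `id`. The rule “keep the highest `version` per id” is a hypothetical example, not a universal convention.

```python
import json


def curate(raw_lines: list[str]) -> tuple[list[dict], int]:
    """Turn raw JSON lines into clean records for a curated zone.

    Drops lines that are not valid JSON objects or lack an "id", and keeps only
    the record with the highest "version" per id (a hypothetical dedup rule).
    Returns (curated_records sorted by id, rejected_count).
    """
    latest: dict[str, dict] = {}
    rejected = 0
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            rejected += 1
            continue
        if not isinstance(record, dict) or "id" not in record:
            rejected += 1
            continue
        key = str(record["id"])
        if key not in latest or record.get("version", 0) > latest[key].get("version", 0):
            latest[key] = record
    return sorted(latest.values(), key=lambda r: str(r["id"])), rejected


raw = [
    '{"id": 1, "version": 1, "status": "new"}',
    '{"id": 1, "version": 2, "status": "shipped"}',
    '{"id": 2, "version": 1, "status": "new"}',
    'not json',
    '{"version": 3}',
]
records, rejected = curate(raw)
print(records)   # id 1 keeps version 2 ("shipped"); id 2 is unchanged
print(rejected)  # 2
```

Counting rejects instead of silently dropping them gives you a simple data-quality metric to monitor.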

Metadata Management

Raw data without context is like a map without a legend: hard to read and easy to misinterpret. Metadata management is the GPS of a data lake, creating a searchable index that enables users to make sense of vast pools of information. Managing metadata effectively allows your team to locate, classify, and retrieve data efficiently, reducing redundancy and improving analytics workflows.

Metadata tools like AWS Glue Data Catalog or Apache Atlas help automate this process. Glue crawlers can infer schemas and populate the catalog automatically, while Atlas adds classifications and lineage tracking, making it easier to trace data origins and transformations.
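To show what a catalog records and how lineage is traced, here is a minimal in-memory sketch. The `DatasetEntry` and `Catalog` classes, the dataset names, and the `s3://example-lake/...` locations are all hypothetical; this is not the Glue Data Catalog or Atlas API, only the ideas behind them: location, format, schema, tags, and upstream inputs.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """One catalog entry: where a dataset lives, its format, schema, and inputs."""
    name: str
    location: str
    file_format: str
    schema: dict[str, str]
    tags: set[str] = field(default_factory=set)
    upstream: list[str] = field(default_factory=list)  # lineage: direct inputs


class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def find_by_tag(self, tag: str) -> list[str]:
        """Search by classification, e.g. every dataset tagged as PII."""
        return sorted(n for n, e in self._entries.items() if tag in e.tags)

    def lineage(self, name: str) -> list[str]:
        """Return every upstream dataset, walking the lineage graph depth-first."""
        seen: list[str] = []
        stack = list(self._entries[name].upstream)
        while stack:
            parent = stack.pop()
            if parent not in seen:
                seen.append(parent)
                if parent in self._entries:
                    stack.extend(self._entries[parent].upstream)
        return seen


catalog = Catalog()
catalog.register(DatasetEntry("raw.orders", "s3://example-lake/raw/orders/", "json",
                              {"id": "string", "email": "string"}, tags={"pii"}))
catalog.register(DatasetEntry("curated.orders", "s3://example-lake/curated/orders/", "parquet",
                              {"id": "bigint", "status": "string"}, upstream=["raw.orders"]))
catalog.register(DatasetEntry("marts.daily_sales", "s3://example-lake/marts/daily_sales/", "parquet",
                              {"day": "date", "total": "double"}, upstream=["curated.orders"]))

print(catalog.lineage("marts.daily_sales"))  # ['curated.orders', 'raw.orders']
print(catalog.find_by_tag("pii"))            # ['raw.orders']
```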

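For organizations prioritizing analytics, metadata also improves query performance: query engines can use catalog information such as partition locations and file formats to read only the data a query needs. With that map in place, your data lake stays an organized repository rather than a chaotic data “swamp.”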

Data Governance and Security

A data lake without governance is a liability waiting to happen. Building robust governance policies ensures that your data lake operates within regulatory frameworks while maintaining transparency. This includes setting role-based access controls (RBAC), establishing data retention policies, and maintaining an audit trail.
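Role-based access control and an audit trail can be sketched together: every access decision is made from the user’s role, and every decision is logged whether it is allowed or denied. The roles, users, and prefix rules below are hypothetical; in practice these rules would live in your platform’s IAM or access-management service rather than in application code.

```python
from datetime import datetime, timezone

# Hypothetical roles mapped to the lake prefixes they may read.
ROLE_READ_PREFIXES = {
    "analyst": ["curated/", "marts/"],
    "data_engineer": ["raw/", "curated/", "marts/"],
}
USER_ROLES = {"alice": "analyst", "bob": "data_engineer"}

audit_log: list[dict] = []


def can_read(user: str, key: str) -> bool:
    """Decide read access from the user's role and record the decision."""
    role = USER_ROLES.get(user)
    allowed = role is not None and any(
        key.startswith(prefix) for prefix in ROLE_READ_PREFIXES.get(role, [])
    )
    # Log every decision, including denials, so the trail is complete.
    audit_log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": "read",
        "key": key,
        "allowed": allowed,
    })
    return allowed


print(can_read("alice", "raw/crm/customers.json"))  # False: analysts cannot read raw
print(can_read("bob", "raw/crm/customers.json"))    # True
print(len(audit_log))                               # 2
```

Granting permissions to roles rather than to individual users keeps the policy small and makes audits easier to read.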

For sensitive data, encryption is absolutely critical. Encrypting data both at rest (in storage) and in transit (during transfers) ensures that it remains protected against unauthorized access. Additionally, identity platforms like Azure Active Directory (now Microsoft Entra ID) or Okta can be integrated to enforce stronger authentication measures.
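On AWS, both kinds of encryption can be expressed as configuration on the lake’s S3 bucket. The sketch below assumes a hypothetical bucket named `example-data-lake` and only builds the documents: a bucket policy that denies any request not made over TLS (using the `aws:SecureTransport` condition key), and a default server-side encryption rule using AWS KMS. The boto3 calls that would apply them are shown as comments because they require AWS credentials.

```python
import json

BUCKET = "example-data-lake"  # hypothetical bucket name

# In transit: deny every request to the bucket that is not made over TLS.
bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [
                f"arn:aws:s3:::{BUCKET}",
                f"arn:aws:s3:::{BUCKET}/*",
            ],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }
    ],
}

# At rest: encrypt new objects with AWS KMS by default.
# This is the shape expected for ServerSideEncryptionConfiguration.
encryption_config = {
    "Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms"}}
    ]
}

print(json.dumps(bucket_policy, indent=2))

# To apply (requires AWS credentials; not executed here):
# import boto3
# s3 = boto3.client("s3")
# s3.put_bucket_policy(Bucket=BUCKET, Policy=json.dumps(bucket_policy))
# s3.put_bucket_encryption(Bucket=BUCKET,
#                          ServerSideEncryptionConfiguration=encryption_config)
```

Azure offers equivalent controls (for example, requiring secure transfer on a storage account), so treat this as one platform’s version of the idea.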

Compliance with data protection regulations such as GDPR or CCPA is easier to maintain with tools that monitor and report access patterns, which also strengthens accountability across your organization. For more on architectures and compliance strategies in cloud data systems, you could find applicable information in AWS vs Azure Data Engineering: Which is More in Demand?.

Strategies for Optimizing a Data Lake

Optimizing a data lake isn’t just about improving performance—it’s about creating a system that integrates seamlessly into your data strategy while being cost-effective and scalable. With the right techniques, you can ensure your data lake supports the needs of data engineers, analysts, and scientists alike. Let’s explore practical strategies in partitioning, managing costs, and boosting performance.

Partitioning and Indexing

Partitioning and indexing are critical methods for speeding up data retrieval and keeping query performance efficient in a data lake. Partitioning splits a dataset into separate chunks, usually directories, based on the values of one or more columns, so a query that filters on those columns can skip the partitions it doesn’t need. It’s like dividing a giant library into sections by topic: searching becomes much more manageable. Partitioning on columns that queries commonly filter by, such as date or region, keeps querying efficient even as volume grows.
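Hive-style partitioning encodes each partition column as a `key=value` path segment, which is what lets an engine prune partitions from the path alone. This standard-library sketch builds such paths and filters them the way a query with `WHERE day = '01' AND region = 'eu'` would; the `sales` table and the year/month/day/region scheme are hypothetical examples.

```python
from datetime import date


def partition_path(table: str, event_date: date, region: str) -> str:
    """Hive-style layout: each partition column becomes a key=value path segment."""
    return (f"{table}/year={event_date.year}/month={event_date.month:02d}"
            f"/day={event_date.day:02d}/region={region}")


def parse_partition(path: str) -> dict[str, str]:
    """Recover partition values from the key=value segments of a path."""
    return dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)


def prune(paths: list[str], **filters: str) -> list[str]:
    """Keep only partitions matching every filter; files in the others are never opened."""
    return [p for p in paths
            if all(parse_partition(p).get(k) == v for k, v in filters.items())]


partitions = [
    partition_path("sales", date(2024, 5, 1), "eu"),
    partition_path("sales", date(2024, 5, 1), "us"),
    partition_path("sales", date(2024, 5, 2), "eu"),
]
print(prune(partitions, day="01", region="eu"))
# ['sales/year=2024/month=05/day=01/region=eu']
```

Pruning only helps when queries actually filter on the partition columns, which is why the choice of columns matters more than the number of partitions.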

Once partitioning is in place, indexing acts as a navigation tool to speed up searches even further. Indexes work like the index of a book, pointing you to the exact location of the information you need. Apache Hive partitioning and AWS Glue partition indexes are common ways to achieve this layer of optimization.
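A closely related technique, swapped in here for illustration, is file-level min/max statistics (sometimes called zone maps), similar in spirit to the column statistics Parquet stores in its file footers. If you know each file’s minimum and maximum value for a column, a range query can skip every file whose range cannot match. The `FileStats` class and file names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file min/max of one column, collected when the file is written."""
    path: str
    min_order_id: int
    max_order_id: int


def files_to_scan(stats: list[FileStats], lo: int, hi: int) -> list[str]:
    """Return files whose [min, max] range overlaps the query range [lo, hi];
    every other file is skipped without being opened."""
    return [s.path for s in stats if s.max_order_id >= lo and s.min_order_id <= hi]


index = [
    FileStats("orders/part-0000.parquet", 1, 1000),
    FileStats("orders/part-0001.parquet", 1001, 2000),
    FileStats("orders/part-0002.parquet", 2001, 3000),
]

# WHERE order_id BETWEEN 1500 AND 1600 only needs one of the three files.
print(files_to_scan(index, 1500, 1600))  # ['orders/part-0001.parquet']
```

Skipping works best when data is sorted or clustered on the filtered column; if values are scattered randomly, every file’s range overlaps almost every query.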

For additional insights on implementing sophisticated techniques like data partitioning and indexing, explore Advanced Data Modeling Techniques.

Cost Management

Cost can spiral out of control if not monitored, especially with data lakes. Tiered storage is one effective way to manage expenses: frequently accessed data goes on fast, performance-optimized storage tiers, and rarely accessed data on slower, cheaper tiers. The strategy mirrors a well-organized closet, with daily essentials within reach and seasonal items stored in the attic.
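The core of a tiering decision can be as simple as “how long since this data was last read?” The sketch below assigns a tier from the last-access time; the tier names and the 30- and 180-day thresholds are hypothetical and should be tuned to your own access patterns and your provider’s pricing.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds, checked in order from hottest to coldest.
TIERS = [
    (timedelta(days=30), "hot"),    # read within the last 30 days
    (timedelta(days=180), "cool"),  # read within the last 180 days
]
COLDEST_TIER = "archive"


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Pick the hottest tier whose age limit covers the time since last access."""
    age = now - last_accessed
    for max_age, tier in TIERS:
        if age <= max_age:
            return tier
    return COLDEST_TIER


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(choose_tier(datetime(2024, 5, 20, tzinfo=timezone.utc), now))  # hot
print(choose_tier(datetime(2024, 2, 1, tzinfo=timezone.utc), now))   # cool
print(choose_tier(datetime(2023, 1, 1, tzinfo=timezone.utc), now))   # archive
```

Colder tiers usually charge more per retrieval, so data that is cheap to store but read often can end up costing more in an archive tier.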

Lifecycle policies also play a vital role in controlling costs. By automatically deleting data or transitioning it to “colder” storage tiers when specific conditions are met, such as an object’s age, lifecycle policies prevent unnecessary spending on outdated or seldom-used information. AWS (S3 Lifecycle) and Azure (Blob Storage lifecycle management) both offer such policies.
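As one concrete example, an S3 lifecycle configuration is a small document of rules. This sketch builds a rule for a hypothetical `raw/` prefix that moves objects to Standard-IA after 30 days, to Glacier Flexible Retrieval (storage class `GLACIER`) after 90 days, and deletes them after 365 days. The day counts are illustrative, not recommendations, and the boto3 call that would apply the rule is left as a comment because it needs AWS credentials.

```python
import json

# Lifecycle rule for the raw zone of a hypothetical bucket.
lifecycle_config = {
    "Rules": [
        {
            "ID": "raw-zone-tiering",
            "Filter": {"Prefix": "raw/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}

# Sanity check: transition days increase and all come before expiration.
rule = lifecycle_config["Rules"][0]
days = [t["Days"] for t in rule["Transitions"]]
assert days == sorted(days) and days[-1] < rule["Expiration"]["Days"]

print(json.dumps(lifecycle_config, indent=2))

# To apply (requires AWS credentials; not executed here):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-data-lake", LifecycleConfiguration=lifecycle_config)
```

Scoping the rule to the raw zone leaves curated data, which is read more often, in its faster tier.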



Performance Improvements

Data lake performance underpins your entire analytics pipeline. Without optimization, slow queries and retrieval times hinder productivity. Distributed engines like Apache Spark speed up querying by parallelizing work across a cluster, while columnar storage formats such as Parquet or ORC significantly accelerate reads, because a query only has to read the columns it references.
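Why columnar formats read faster can be seen with a toy table stored both ways. The sketch models a simplifying assumption: in a row-oriented file (like CSV or JSON lines) each whole record must be read to reach one field, while in a column-oriented layout (the idea behind Parquet and ORC) only the needed column is read. It counts values touched for `SUM(amount)`; the data is hypothetical.

```python
# The same small table in two layouts.
rows = [  # row-oriented: each record stored together (like CSV or JSON lines)
    {"order_id": 1, "customer": "a", "country": "DE", "amount": 10.0},
    {"order_id": 2, "customer": "b", "country": "FR", "amount": 25.0},
    {"order_id": 3, "customer": "c", "country": "DE", "amount": 5.0},
]
columns = {  # column-oriented: each column stored together (like Parquet or ORC)
    name: [row[name] for row in rows] for name in rows[0]
}


def total_amount_rows(rows: list[dict]) -> tuple[float, int]:
    """SUM(amount) over rows. Models reading every field of every record,
    since a row-oriented file must be parsed record by record."""
    values_read = sum(len(row) for row in rows)
    return sum(row["amount"] for row in rows), values_read


def total_amount_columns(columns: dict[str, list]) -> tuple[float, int]:
    """SUM(amount) over columns: only the 'amount' column is read."""
    amount = columns["amount"]
    return sum(amount), len(amount)


print(total_amount_rows(rows))        # (40.0, 12)
print(total_amount_columns(columns))  # (40.0, 3)
```

With 4 columns the columnar read touches a quarter of the values; on wide tables with dozens of columns the gap grows accordingly, and storing similar values together also helps compression.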

Another essential strategy is monitoring and fine-tuning your setup. From workload management to cluster provisioning, continuous oversight keeps the system aligned with performance goals. Managed services on cloud platforms like Azure can also simplify scaling and resource management. The Challenge of Azure Data Management provides actionable guidance for refining and optimizing Azure-based data lakes.
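A simple monitoring check is to compare tail latency against a target, since averages can hide a few very slow queries. This sketch computes a nearest-rank 95th percentile over query latencies and flags when it exceeds a target. The latency values and the 5-second target are hypothetical; in practice the numbers would come from your query engine’s logs or metrics.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of the data <= it."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_latency(latencies_s: list[float], p95_target_s: float) -> dict:
    """Report p95 latency and whether it meets the target."""
    p95 = percentile(latencies_s, 95)
    return {"p95_s": p95, "within_target": p95 <= p95_target_s}


# Hypothetical query latencies in seconds: mostly fast, with two slow outliers.
recent = [1.2, 0.9, 1.1, 1.4, 0.8, 1.0, 1.3, 1.2, 9.5, 1.1,
          1.0, 0.9, 1.2, 1.1, 1.3, 1.0, 0.8, 1.2, 1.1, 8.7]
print(check_latency(recent, p95_target_s=5.0))
# {'p95_s': 8.7, 'within_target': False}
```

Here the mean is under 2 seconds, yet the p95 check fails, which is the kind of regression that slow partitions or oversized scans tend to cause.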

If done correctly, these performance tweaks transform your data lake from a sluggish storage bin into a vital engine driving insights and innovation. For additional tips, this article on Monitoring and Optimizing Data Lake Environments is worth exploring.

In the constantly evolving landscape of big data management, adopting strategies like these ensures your data lake remains an adaptive and efficient resource.
