Understanding Data Warehousing: Key Concepts and Insights from Expert Guest Lectures [2025]

Data warehousing is a crucial component in managing and analyzing large sets of data. But what’s the real significance of data warehousing? Simply put, it allows organizations to consolidate data from various sources, enabling efficient reporting and analysis. For data engineers, understanding data warehousing is essential, as it directly impacts system design, performance, and decision-making.

In this article, we’ll explore core concepts surrounding data warehousing and showcase insightful guest lectures that dive into the specifics of designing, implementing, and optimizing these systems. From learning about the differences between a data warehouse and a data lake to mastering the intricacies of data management, you’ll discover valuable strategies that can enhance your skill set. So if you’re looking to deepen your knowledge in this area, you’re in the right place.

To start your journey, check out our detailed resource on Choosing Between a Data Warehouse and a Data Lake and explore the Expert Guest Lectures on Data Engineering & AI Trends. You’ll gain insights that can make a real difference in how you approach data warehousing challenges.

What is Data Warehousing?

Data warehousing is a critical approach in the world of data management, primarily designed for the effective storage and analysis of large volumes of structured data. It serves as a central repository where data from various sources is consolidated. This not only simplifies reporting but also provides a more comprehensive view of organizational performance. It’s essential for data engineers to grasp the intricacies of data warehousing, as it plays a significant role in system design and actionable insights. For more on the differences between data lakes and data warehouses, check out our article on Data Lakes and Data Warehouses.

The History of Data Warehousing

The concept of data warehousing started emerging in the late 1980s when businesses recognized the need for better data management solutions. It originated as a way to gather data from disparate sources, evolving into what we now know as a system specifically optimized for data analysis and reporting. The development of OLAP (Online Analytical Processing) tools in the 1990s propelled this field forward, enabling complex queries and interactive data analysis.

Advancements in technology, particularly in cloud computing and big data, have further transformed data warehousing. These changes have allowed for more scalable, flexible, and efficient storage solutions. Today, organizations use data warehousing for real-time analytics that support data-driven decisions and strategy.

Core Components of a Data Warehouse

Understanding the architecture of a data warehouse is crucial for anyone looking to design or manage these systems. At its core, a data warehouse consists of several essential components. First, there’s the ETL (Extract, Transform, Load) process, which plays a pivotal role in the integration of data. For more details on this, check ETL vs ELT: Key Differences, Comparison.

Another vital element is the data mart, a smaller, more focused subset of a data warehouse tailored to a specific department or business line. Lastly, OLAP systems support fast, multidimensional analysis of data, including complex calculations and large aggregate queries. These components work together to create a robust infrastructure that allows organizations to derive meaningful insights from their data, fueling better business strategies and outcomes.
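To make these components concrete, here is a minimal sketch using only Python's standard library. It is an illustration under stated assumptions, not a production design: the CSV content, the `orders` table, and the `sales_mart` view are hypothetical names invented for the example, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (names and values are made up).
RAW_CSV = """order_id,department,amount,order_date
1,Sales, 120.50 ,2025-01-03
2,marketing,80,2025-01-03
3,SALES,42.25,2025-01-04
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cast types and normalize inconsistent department names."""
    return [
        (int(r["order_id"]), r["department"].strip().lower(),
         float(r["amount"]), r["order_date"])
        for r in rows
    ]

def load(conn, rows):
    """Load: write cleaned rows into the central warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, department TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))

# A data mart, modeled here as a view restricted to one department.
conn.execute("CREATE VIEW sales_mart AS SELECT * FROM orders WHERE department = 'sales'")

# An OLAP-style aggregate: revenue per day for the sales department.
daily_sales = conn.execute(
    "SELECT order_date, SUM(amount) FROM sales_mart GROUP BY order_date ORDER BY order_date"
).fetchall()
print(daily_sales)  # [('2025-01-03', 120.5), ('2025-01-04', 42.25)]
```

Modeling the data mart as a view keeps the example short. In practice a mart is often a separate set of tables or a separate database, and the right choice depends on your platform.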

Designing an Effective Data Warehouse

Designing an efficient data warehouse requires a strategic approach that focuses on schema types and data modeling techniques. Each choice you make can significantly impact the performance of your data warehouse and, ultimately, the quality of data analysis. Let’s break down these aspects to understand their importance in creating a robust system.

Understanding Schema Types

When it comes to data warehousing, schema design plays a critical role in how data is organized and queried. The two primary schema types are star and snowflake schemas. A star schema consists of a central fact table surrounded by denormalized dimension tables. Because each dimension is a single join away from the fact table, queries are simple to write and typically fast to execute. If your analysis involves straightforward data retrieval, such as sales reporting or product performance metrics, the star schema is a solid choice.

On the other hand, the snowflake schema takes the star schema a step further by normalizing the data within the dimension tables. This means that dimensions are split into additional tables, reducing redundancy but increasing complexity. It’s ideal for scenarios where detailed data relationships are crucial and the queries are more complex. However, the snowflake schema can result in slower query performance due to the additional joins required. Ultimately, selecting between star and snowflake schemas depends on your specific reporting needs and the trade-offs you’re willing to make regarding performance and complexity.
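The difference between the two schemas is easiest to see side by side. The sketch below is a toy example with hypothetical table and column names (`fact_sales`, `dim_product`, `dim_product_sf`, `dim_category`), built in an in-memory SQLite database. It answers the same question, revenue per category, against both layouts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star schema: the product dimension is denormalized (category stored inline).
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);

-- Snowflake variant: category is normalized into its own table.
CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_product_sf (
    product_id INTEGER PRIMARY KEY, name TEXT,
    category_id INTEGER REFERENCES dim_category(category_id)
);

-- The fact table is shared by both variants in this sketch.
CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER, amount REAL);

INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_category VALUES (10, 'Electronics'), (20, 'Furniture');
INSERT INTO dim_product_sf VALUES (1, 'Laptop', 10), (2, 'Desk', 20);
INSERT INTO fact_sales VALUES (1, 1, 1000.0), (2, 1, 500.0), (3, 2, 250.0);
""")

# Star: one join from the fact table to the dimension.
star_rows = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales f JOIN dim_product p ON p.product_id = f.product_id
    GROUP BY p.category ORDER BY p.category
""").fetchall()

# Snowflake: an extra join is needed to reach the category name.
snowflake_rows = conn.execute("""
    SELECT c.category, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product_sf p ON p.product_id = f.product_id
    JOIN dim_category c ON c.category_id = p.category_id
    GROUP BY c.category ORDER BY c.category
""").fetchall()

print(star_rows)       # [('Electronics', 1500.0), ('Furniture', 250.0)]
print(snowflake_rows)  # same result, reached through two joins
```

Both queries return the same totals. The star version repeats the category name on every product row, while the snowflake version stores it once at the cost of one more join.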

Data Modeling Techniques

Data modeling lays the foundation for how data will be structured, stored, and accessed in the warehouse, so understanding it will help you create a model tailored to your business’s needs. It is usually done at three levels: conceptual, logical, and physical. Conceptual modeling focuses on high-level entities and relationships without delving into technical specifics, making it easier for stakeholders to grasp the overall framework. Logical modeling goes deeper, defining the attributes, keys, and relationships of each entity independently of any particular database engine. Physical modeling then translates the logical model into a concrete schema (tables, column types, indexes) that will reside within your database.
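As a rough illustration of the step from a logical to a physical model, the sketch below describes one entity in engine-neutral terms and then generates DDL for a specific engine. Everything in it is an assumption made for the example: the `Entity` and `Attribute` classes, the `customer` entity, and the type mapping are hypothetical, and SQLite is used only because it ships with Python.

```python
import sqlite3
from dataclasses import dataclass

@dataclass(frozen=True)
class Attribute:
    name: str
    logical_type: str      # engine-neutral type: "integer", "text", "decimal"
    is_key: bool = False

@dataclass(frozen=True)
class Entity:
    name: str
    attributes: tuple

# Logical model: entities, attributes and keys, with no engine-specific detail.
customer = Entity("customer", (
    Attribute("customer_id", "integer", is_key=True),
    Attribute("full_name", "text"),
    Attribute("lifetime_value", "decimal"),
))

# Physical mapping for one target engine (SQLite here, chosen only for the demo).
SQLITE_TYPES = {"integer": "INTEGER", "text": "TEXT", "decimal": "REAL"}

def to_ddl(entity):
    """Translate a logical entity into a physical CREATE TABLE statement."""
    cols = [
        f"{a.name} {SQLITE_TYPES[a.logical_type]}" + (" PRIMARY KEY" if a.is_key else "")
        for a in entity.attributes
    ]
    return f"CREATE TABLE {entity.name} ({', '.join(cols)})"

ddl = to_ddl(customer)
print(ddl)

conn = sqlite3.connect(":memory:")
conn.execute(ddl)  # the generated statement is valid SQLite DDL
```

Keeping the logical description separate from the type mapping means the same model could be rendered for a different engine by swapping the mapping, which is the point of separating the two levels.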

To facilitate this modeling process, several tools are available, including ER/Studio, Lucidchart, and Microsoft Visio. These tools help visualize and document the relationships between data entities, assisting teams in collaborating and refining their models efficiently. Investing time in proper data modeling not only enhances data integrity but also simplifies future updates and expansions of your data warehouse.

Moreover, if you want to explore best practices for designing a data warehouse, check out the article on Best Practices for Designing a Scalable Data Warehouse. This will provide you with practical insights that reinforce the concepts discussed here, preparing you for real-world challenges.

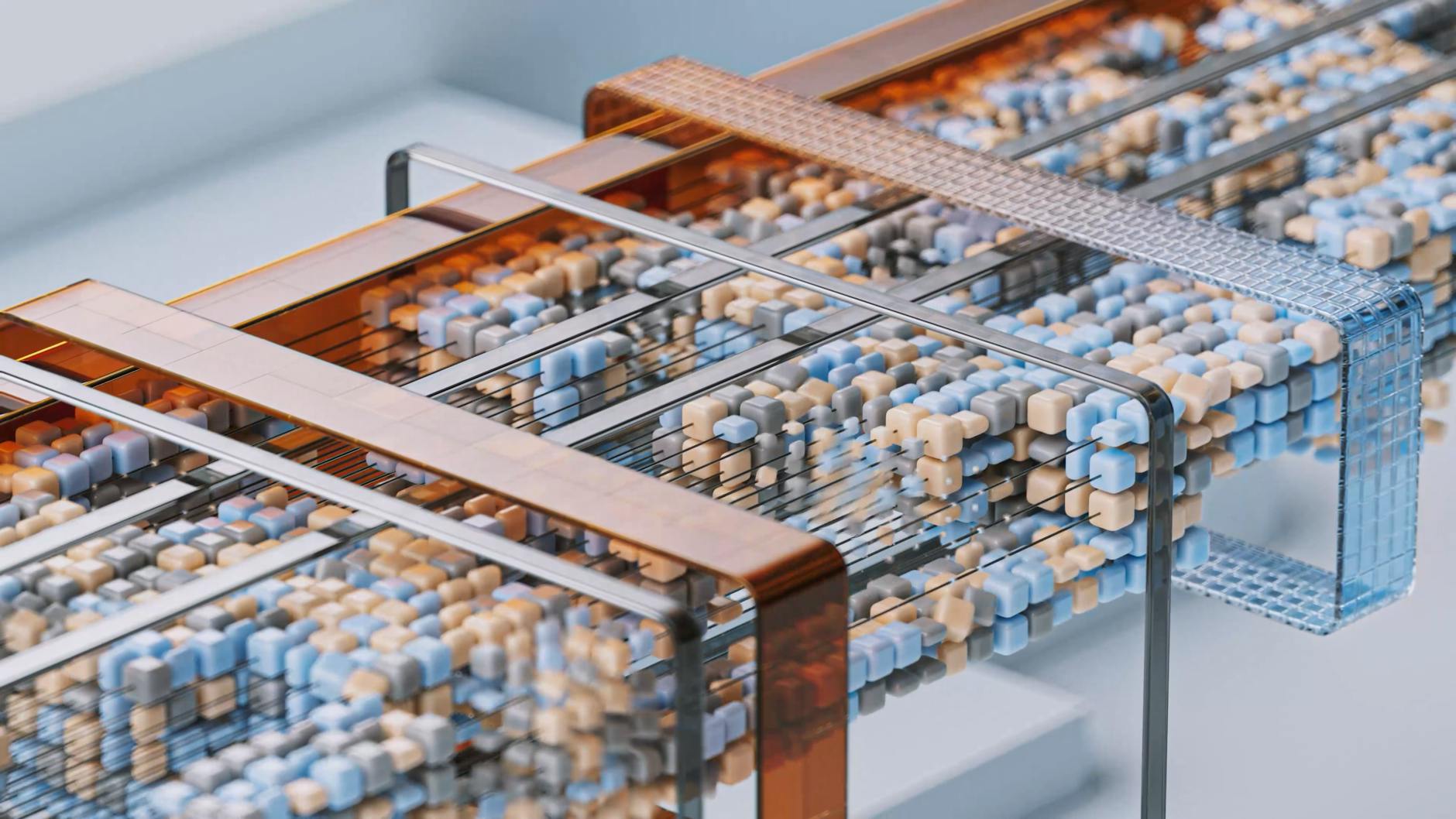

Photo by Google DeepMind

Learning from Expert Guest Lectures

Guest lectures offer a unique opportunity for anyone diving into the nitty-gritty of data warehousing. These sessions are often rich in insights that can help you grasp complex concepts in a more relatable way. Listening to experts shapes your understanding and guides you through the latest trends, practical applications, and strategic approaches for effective data warehouse design and implementation. Let’s explore some of the valuable insights shared in recent guest lectures.

Data Warehousing Trends Discussed in Guest Lectures

In numerous guest lectures, experts highlight significant trends shaping the future of data warehousing. One interesting trend is the shift towards cloud-based systems, which enables easier scalability and reduced operational costs. Many organizations are transitioning from traditional on-premise data warehouses to cloud solutions due to benefits like real-time data processing and enhanced accessibility.

It’s also noteworthy that artificial intelligence (AI) and machine learning (ML) are increasingly integrated into data warehousing strategies. These technologies automate data management processes, enhancing data quality and allowing organizations to extract deeper insights more efficiently. In guest lectures, experts often discuss how predictive analytics is becoming a standard feature, enabling businesses to anticipate trends and make data-driven decisions.

Another trend gaining traction is the emphasis on data governance and security. With the rise of regulations like GDPR, guest speakers stress the importance of maintaining compliance while ensuring the integrity and security of data stored within warehouses. This focus on governance is expected to continue as organizations become more aware of the risks associated with improper data management.

For further insight into these topics, check The Role of Data Engineering in Building Large-Scale AI Models, which outlines how data engineering intersects with dynamic data warehousing practices.

Real-World Case Studies from Guest Speakers

Real-world applications of data warehousing come to life through engaging case studies presented by guest speakers. These stories not only illustrate the challenges faced by organizations but also showcase innovative strategies implemented to overcome them. One speaker might recount how a retail enterprise harnessed a data warehouse to optimize inventory management, cutting down costs significantly and improving customer satisfaction.

Another enlightening case study involves a financial institution that revamped its reporting processes. By designing a robust data warehouse, they streamlined their compliance reporting, improving accuracy and timeliness. Such examples serve as powerful confirmation of the theoretical concepts discussed, making it easier for learners to envision how these techniques can be applied in their own work.

Moreover, lectures often delve into the specifics of implementing various tools and technologies within data warehousing. For example, when discussing ETL integration, some speakers share their experience with software like Talend or Informatica, which can make data extraction and transformation more intuitive.

To deepen your understanding, explore additional resources on Expert Guest Lectures on Data Engineering & AI Trends. These sessions are designed to equip you with actionable knowledge and help you navigate the evolving landscape of data warehousing more effectively.


Optimizing Data Warehouse Performance

Optimizing data warehouse performance is essential to ensure that organizations can handle large volumes of data efficiently. As data grows, performance issues are bound to arise, but there are practical strategies to address them. Two primary strategies are automation and monitoring, which help not only with managing performance but also with scaling the data warehouse effectively.

Automation in Data Warehousing

Automation plays a pivotal role in managing data warehouses effectively. By streamlining regular maintenance tasks, organizations can save time and minimize human error. It also frees engineers to focus on more complex data-related issues.

One of the main benefits of automation is improved data integration. Instead of relying on manual processing, automated ETL (Extract, Transform, Load) systems can handle data ingestion on their own. These systems can run at scheduled intervals or in real time, so the data available for analysis stays fresh. For those looking to enhance their understanding or get started with automation tools, consider exploring Data Warehousing 101: ETL, Schema Design & Scaling. It provides a foundational overview of these processes and their significance in maintaining an efficient data warehouse.
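One common way to make a scheduled load safe to run repeatedly is to copy only the rows that arrived since the previous run. The sketch below shows that idea under simplifying assumptions: the `events` table and the `run_incremental_load` function are hypothetical, the source rows are assumed to have ever-increasing ids, and two in-memory SQLite databases stand in for a source system and the warehouse. The scheduler itself (cron, an orchestrator, or similar) is not shown.

```python
import sqlite3

def run_incremental_load(source, warehouse):
    """Copy only source rows newer than the warehouse's current high-water mark."""
    # Highest event id already loaded; 0 when the warehouse table is empty.
    (watermark,) = warehouse.execute(
        "SELECT COALESCE(MAX(event_id), 0) FROM events"
    ).fetchone()
    new_rows = source.execute(
        "SELECT event_id, payload FROM events WHERE event_id > ? ORDER BY event_id",
        (watermark,),
    ).fetchall()
    warehouse.executemany("INSERT INTO events VALUES (?, ?)", new_rows)
    warehouse.commit()
    return len(new_rows)

# Two in-memory databases stand in for a source system and the warehouse.
source = sqlite3.connect(":memory:")
warehouse = sqlite3.connect(":memory:")
for db in (source, warehouse):
    db.execute("CREATE TABLE events (event_id INTEGER PRIMARY KEY, payload TEXT)")

source.executemany("INSERT INTO events VALUES (?, ?)", [(1, "a"), (2, "b")])
first_run = run_incremental_load(source, warehouse)    # loads both rows

source.execute("INSERT INTO events VALUES (3, 'c')")
second_run = run_incremental_load(source, warehouse)   # loads only the new row
third_run = run_incremental_load(source, warehouse)    # nothing new to load

print(first_run, second_run, third_run)  # 2 1 0
```

Because each run starts from what the warehouse already holds, running the job twice by accident does not duplicate data, which matters once no human is watching every execution.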

Another core aspect of automation is monitoring and alerting. Setting up automated monitoring tools can track system performance metrics and user queries, offering insights on any bottlenecks or performance hiccups. By proactively addressing these issues, organizations can maintain optimal performance without constant oversight.

Monitoring and Scaling

Monitoring is critical for ensuring that your data warehouse remains responsive as data volumes grow. Regular checks on system performance can surface slow queries, server health problems, and rising storage utilization. When these metrics are monitored continuously, organizations can make informed decisions about optimizing resources.
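As a minimal sketch of slow-query monitoring, the wrapper below times each query and records whether it crossed a threshold. The function names, the log format, and the 0.5 second threshold are all assumptions for illustration. Most warehouse platforms expose their own query history, which would normally be the first place to look.

```python
import sqlite3
import time

SLOW_QUERY_SECONDS = 0.5  # hypothetical threshold; tune for your workload

def timed_query(conn, sql, params=(), log=None):
    """Run a query, record its duration in `log`, and return the rows."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed = time.perf_counter() - start
    if log is not None:
        log.append({"sql": sql, "seconds": elapsed,
                    "slow": elapsed >= SLOW_QUERY_SECONDS})
    return rows

def slow_queries(log):
    """Return the logged queries that crossed the threshold."""
    return [entry for entry in log if entry["slow"]]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany("INSERT INTO sales (amount) VALUES (?)", [(10.0,), (20.0,), (30.0,)])

query_log = []
rows = timed_query(conn, "SELECT COUNT(*) FROM sales", log=query_log)
print(rows)
print(query_log[0]["seconds"], len(slow_queries(query_log)))
```

An alerting job could call `slow_queries` periodically and notify the team when the list is non-empty.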

Scaling is another integral part of maintaining high performance in a data warehouse. As your data grows, so must your infrastructure. Knowing when to scale up (moving to more powerful servers) or scale out (adding more machines) can be the difference between seamless analysis and sluggish performance. Partitioning and indexing also help: partitioning lets the engine skip data that a query does not need, and an index lets it locate matching rows without scanning the whole table. For further insights into these scaling techniques, check out Advanced Data Modeling: Best Practices and Real-World Success Stories.
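The effect of an index can be checked by asking the database for its query plan before and after creating one. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN`. The `sales` table and the `idx_sales_date` index are hypothetical, the exact wording of the plan varies between SQLite versions, and partitioning is not shown because SQLite has no native table partitioning.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (sale_date, amount) VALUES (?, ?)",
    [(f"2025-01-{day:02d}", float(day)) for day in range(1, 29)],
)

QUERY = "SELECT SUM(amount) FROM sales WHERE sale_date = ?"

def plan(conn):
    """Return SQLite's human-readable plan steps for QUERY."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + QUERY, ("2025-01-15",)).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description

plan_before = plan(conn)   # without an index: a scan of the whole table
conn.execute("CREATE INDEX idx_sales_date ON sales (sale_date)")
plan_after = plan(conn)    # with the index: a search that names idx_sales_date

print(plan_before)
print(plan_after)
```

The query and its result are unchanged by the index. Only the access path differs, which is why indexing is usually one of the cheaper optimizations to try before scaling hardware.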

A robust scaling strategy encourages organizations to assess their future data needs continually. Regular evaluations allow engineers to stay ahead of potential growth by estimating necessary changes to the architecture before issues arise.

By effectively implementing automation, proactive monitoring, and scalable solutions, you can substantially improve the performance of your data warehouse. This not only lays a foundation for efficient data management but also positions your organization to adapt quickly to evolving analytical demands.

Conclusion

Understanding data warehousing is essential for any data engineer looking to excel in the field. The insights gained from expert guest lectures can significantly impact your approach to designing and optimizing these systems.

Key takeaways include the importance of proper schema design and the growing trends of automation and AI integration. As market needs evolve, staying updated will empower you to enhance data management strategies effectively.

To dive deeper into these concepts and refine your skills, be sure to explore resources like Snowflake Integration: Complete Guide and Data Engineering Best Practices.

What new techniques will you incorporate in your data warehousing practices after learning from these expert insights?
