How to Build an Automated Data Extraction Pipeline from APIs

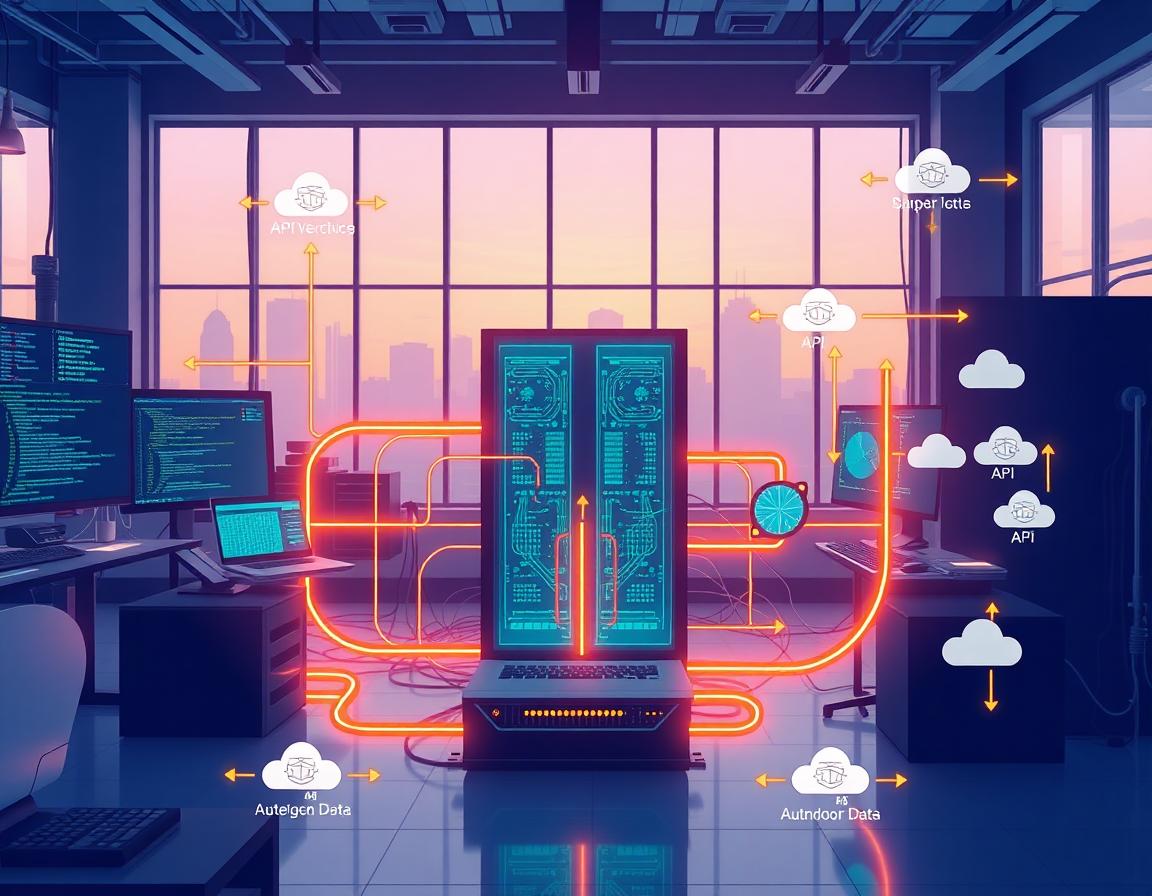

In today’s data-driven world, automated data extraction pipelines are essential for efficient and timely data analysis. They simplify the process of gathering data from various sources, especially APIs, which serve as crucial gateways to diverse datasets.

This post will guide you through building your own automated data extraction pipeline from APIs. You’ll learn the step-by-step process, common challenges, and best practices that will set you up for success. Whether you’re a seasoned data engineer or making a career shift into this field, mastering this skill can add significant value to your toolkit.

Getting started might feel daunting, but with the right training, like the personalized sessions offered by Data Engineer Academy, you can ease into the complexities of data workflows. Plus, for more insights and practical tips, check out the Data Engineer Academy YouTube channel. Let’s jump right into the essentials of pipeline creation, so you can harness the power of automation in your projects.

Understanding the Basics of Data Extraction from APIs

Data extraction is at the heart of building efficient automated pipelines. When you hear “data extraction,” think of it as retrieving valuable information from various sources – and APIs (Application Programming Interfaces) play a significant role here. They act as the bridges between you and the vast seas of data available online. Understanding how to harness these APIs can set you on the right path to building the automation tools that will power your data-driven projects.

What is Data Extraction?

Data extraction is the process of collecting data from different sources, such as databases, websites, and APIs. It involves gathering unprocessed data and preparing it for subsequent handling, analysis, or storage. This is essential because raw data alone doesn’t provide valuable insights. It needs to be transformed into meaningful information. APIs serve as crucial gateways for this process, allowing you to programmatically access and manipulate data. By using APIs effectively, you can automate your data extraction tasks, significantly saving time and reducing the potential for errors. To simplify your understanding of this concept, you might want to check out our ETL Data Extraction Explained in Just 5 Minutes.

Types of APIs

When it comes to data extraction, not all APIs are created equal. Here’s a quick overview of three main types:

- REST (Representational State Transfer): This API type uses standard HTTP methods, making it easy to retrieve or manipulate resources. REST is widely used for its simplicity and speed, especially when fetching data in JSON format.

- SOAP (Simple Object Access Protocol): SOAP is more rigid, requiring a fixed XML message structure. It supports formal contracts and security standards such as WS-Security, which makes it a common choice where reliability and protocol compliance matter, such as financial services.

- GraphQL: A more recent approach that lets you request only the fields you need. This can significantly reduce the amount of data transmitted over the network, enhancing performance.

Understanding these API types is essential because they dictate how you will structure your requests and handle responses. This knowledge will guide you in selecting the most appropriate API for your specific data extraction needs.
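To make the difference in request structure concrete, here is a minimal sketch of how the same lookup (a customer’s name and email) might be shaped for a REST endpoint versus a GraphQL endpoint. The host `api.example.com`, the paths, and the field names are assumptions for illustration, not a real API.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://api.example.com"  # hypothetical host


def rest_request(customer_id):
    """REST: the resource lives in the URL; options go in the query string."""
    query = urlencode({"fields": "name,email"})
    return {
        "method": "GET",
        "url": f"{BASE_URL}/customers/{customer_id}?{query}",
        "body": None,
    }


def graphql_request(customer_id):
    """GraphQL: one endpoint; the query document names the fields to return."""
    query = "query($id: ID!) { customer(id: $id) { name email } }"
    body = json.dumps({"query": query, "variables": {"id": str(customer_id)}})
    return {"method": "POST", "url": f"{BASE_URL}/graphql", "body": body}
```

The functions only describe the requests; sending them is left to whichever HTTP client you choose.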

Common Use Cases for Data Extraction

Data extraction isn’t just a technical task; it has real-world applications that can empower various business functions:

- Data Analysis: By extracting extensive datasets, analysts can uncover trends, build predictive models, and gain insights that drive business strategies.

- Reporting: Extracted data can be compiled into reports, dashboards, or visualizations, making it accessible to stakeholders for informed decision-making.

- Integration: Data extracted from APIs can be integrated into databases or other applications, facilitating a smoother flow of information across platforms.

- Machine Learning: For machine learning projects, datasets need to be extracted, cleaned, and manipulated to train algorithms effectively.

Utilizing API data extraction effectively in these scenarios not only enhances operational efficiency but also improves data-driven decision-making across your organization.

As you explore these concepts, remember that learning from the experts can accelerate your understanding. Consider personalized training from Data Engineer Academy to delve deeper into building your data extraction pipelines. Plus, don’t forget to check out the informative content available on our YouTube channel.

Setting Up Your Environment

Creating an efficient automation pipeline for data extraction from APIs begins with setting up the right environment. This crucial step lays the groundwork for the tools and technologies you’ll need to interact with APIs effectively. Let’s explore the essential sub-sections for your environment setup.

Choosing the Right Tools

When it comes to programming languages for API interaction, you have multiple options. Python stands out, thanks to its simplicity and powerful libraries. Libraries like Requests make it easy to send HTTP requests and handle responses. Other languages have comparable HTTP clients, such as Axios for JavaScript and OkHttp for Java. Here are some popular options to consider:

- Python: Known for its readability and rich ecosystem. Useful libraries include Requests for making HTTP calls and Pandas for working with the results.

- JavaScript: With libraries like Axios, it allows for smooth integration in web applications.

- Java: Offers strong support for building robust backend systems, also capable with libraries like OkHttp.

- Ruby: Provides options like RestClient, which is straightforward and effective for making API requests.

By choosing the right tools, you position yourself for success in building a reliable data extraction pipeline.

Development Environment Setup

Setting up your local development environment is next. You’ll want to consider using virtual environments or containers to manage dependencies and maintain project isolation. Here’s a quick guide to get you started:

- Install Python/Node.js: Choose your preferred language and install the necessary runtime (Python, Node.js, etc.).

- Create a Virtual Environment:

- For Python, run:

    python -m venv myenv

This creates a folder that keeps your project’s dependencies separate from your global installation.

- For Node.js, consider using Docker to create containers that house all your project dependencies, ensuring a consistent environment across different setups.

- Activate Your Environment:

- For Python on macOS or Linux, run:

    source myenv/bin/activate

On Windows, run myenv\Scripts\activate instead.

- Set Up Version Control: Use Git for version control to track changes and collaborate more effectively, ensuring your setup is as organized as your code.

For practical applications, you can check out the 5 Real World Free Projects You Can Start Today to see how this setup translates into actual projects.

Authentication and Security Best Practices

Accessing APIs can expose your applications to risks, making security paramount. Here are some best practices to securely interact with APIs:

- Use API Tokens: Tokens authenticate your API calls. Keep them confidential and store them outside your code, for example in environment variables.

- Implement HTTPS: Always use HTTPS to encrypt data in transit, protecting it from interception.

- Rate Limiting: Most APIs have usage limits. Respect these boundaries to avoid service interruptions.

- Monitor and Log Activity: Keep track of API usage patterns to detect any unusual activity or potential breaches.

- Periodic Token Rotation: Regularly change access tokens to mitigate risks.
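As a minimal sketch of the first two practices, the helpers below read a token from an environment variable and refuse to call a non-HTTPS URL. The variable name `API_TOKEN` and the `Bearer` scheme are assumptions; check your API’s documentation for the header it actually expects.

```python
import os
from urllib.parse import urlparse


def build_auth_headers(env_var="API_TOKEN"):
    """Read the token from the environment so it never appears in source code."""
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable before running")
    # Assumed scheme: many APIs use "Bearer", others expect a custom header.
    return {"Authorization": f"Bearer {token}"}


def require_https(url):
    """Fail fast if a URL would send credentials over an unencrypted connection."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"Refusing to call non-HTTPS URL: {url}")
    return url
```

Because the token lives in the environment, rotating it means updating one variable rather than editing and redeploying code.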

For comprehensive strategies on securing data pipelines, refer to How to Secure Data Pipelines in the Cloud.

By setting up the right tools and ensuring security, you’ll pave the way for building an automated data extraction pipeline that is both efficient and secure, preparing you for the challenges ahead in your data engineering journey. If you’re looking for more granular guidance, consider personalized training sessions at Data Engineer Academy, or explore our resources on the Data Engineer Academy YouTube channel.

Building the Automated Data Extraction Pipeline

Creating an automated data extraction pipeline from APIs involves several key steps. From designing your workflow to implementing extraction logic, each component plays a vital role. In this section, we will explore how to design the workflow, implement data extraction logic, handle data cleaning and transformation, and set up automation. This approach will give you a solid foundation for your automated pipeline.

Designing the Workflow

A well-structured workflow is at the heart of your automated data extraction pipeline. This usually includes three main stages: data extraction, transformation, and loading (ETL). Here’s a closer look at each component:

- Data Extraction: This is the first step in the process. You connect to APIs using HTTP requests to fetch the data you need. The efficiency of this phase is critical as it sets the pace for the entire operation.

- Data Transformation: After fetching the data, it often requires cleansing and restructuring. This may involve filtering unnecessary fields, changing data formats, or aggregating data to suit your needs better.

- Loading: Finally, the transformed data is loaded into a database or data warehouse where it can be accessed by analytics tools for reporting and insights.

A powerful workflow ensures smooth transitions through these stages—that’s where tools like Apache Airflow or custom scripts can be indispensable. For instance, you might automate the execution of your incremental data loading pipelines and define schedules that align with your business requirements. For deeper insights, explore Data Engineering: Incremental Data Loading Strategies.
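The three stages can be sketched as three small functions. This is an illustration under stated assumptions, not a production design: `extract` returns hard-coded records in place of a real API call, and the `customers` table and its columns are hypothetical. SQLite stands in for whatever database or warehouse you load into.

```python
import sqlite3


def extract():
    """Stand-in for an API call; returns records shaped like a JSON response."""
    return [
        {"id": 1, "name": " Ada ", "signup": "2024-01-05", "debug": "x"},
        {"id": 2, "name": "Grace", "signup": "2024-02-11", "debug": "y"},
    ]


def transform(records):
    """Keep only the needed fields and trim stray whitespace."""
    return [(r["id"], r["name"].strip(), r["signup"]) for r in records]


def load(rows, connection):
    """Write rows into the target table, creating it on first run."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup TEXT)"
    )
    # INSERT OR REPLACE keeps reruns from duplicating rows with the same id.
    connection.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    connection.commit()


def run_pipeline(connection):
    """Run extract -> transform -> load and return the resulting row count."""
    load(transform(extract()), connection)
    return connection.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` runs the whole flow against an in-memory database. Keeping each stage a separate function also makes it easier to test stages in isolation or hand them to an orchestrator later.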

Implementing Data Extraction Logic

Fetching data from APIs is straightforward, especially with programming languages like Python. Here’s a simple snippet to illustrate how you might retrieve data:

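    import requests

    url = "https://api.example.com/data"

    # A timeout keeps the script from hanging if the API never responds.
    response = requests.get(url, timeout=10)

    if response.status_code == 200:
        data = response.json()  # parse the JSON body into Python objects
        print(data)
    else:
        print(f"Error: unable to fetch data (status {response.status_code})")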

This example pulls JSON data from an API endpoint. You’ll want to customize it based on your specific needs, including headers for authentication or query parameters. For more complex requirements, such as pagination or connection reuse, consider wrapping these calls in a small client module built on requests.Session rather than scattering them across scripts. Familiarize yourself with the steps in Building Data Pipelines: A Step-by-Step Guide 2024 to get more practical insights.
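Here is one way the customization with headers and query parameters might look. The sketch uses only the standard library’s `urllib` so it runs without extra installs; the same structure applies with Requests. The `Bearer` scheme, the parameter names, and the endpoint are assumptions, and `opener` is a hypothetical hook added so the function can be exercised without a network.

```python
import json
import urllib.parse
import urllib.request


def build_request(base_url, params=None, token=None):
    """Attach query parameters and (optionally) an auth header to a GET request."""
    query = urllib.parse.urlencode(params or {})
    url = f"{base_url}?{query}" if query else base_url
    headers = {"Accept": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"  # assumed auth scheme
    return urllib.request.Request(url, headers=headers)


def fetch_json(request, opener=urllib.request.urlopen, timeout=10):
    """Send the request and decode the JSON body; HTTP errors raise exceptions."""
    with opener(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call such as `fetch_json(build_request("https://api.example.com/data", {"page": 1}, token="..."))` would then return the parsed response.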

Handling Data Cleaning and Transformation

Once the data is extracted, cleaning and transformation are essential to make it usable. Here are some techniques you might consider:

- Remove Duplicates: Ensure that your dataset is free from duplicate records, which can skew analysis.

- Format Correction: Check for inconsistencies in data formats, such as date formats or numerical precision.

- Value Mapping: Sometimes categories need renaming for clarity. If you have customer ratings, for example, mapping numerical values to descriptive labels can vastly improve interpretability.

You can utilize libraries like Pandas in Python to streamline this process, as it comes with built-in functions for data cleaning and manipulation. For a comprehensive approach to automation and efficiency, align this phase with insights from Automating ETL with AI.
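The three techniques above can be sketched in plain Python, which keeps the example dependency-free. In Pandas the equivalent steps would typically use `drop_duplicates`, `to_datetime`, and `map`. The record fields, the accepted date formats, and the rating labels are assumptions for illustration.

```python
from datetime import datetime

# Hypothetical mapping from numeric ratings to descriptive labels.
RATING_LABELS = {1: "poor", 2: "fair", 3: "good", 4: "very good", 5: "excellent"}


def normalize_date(value):
    """Accept a couple of common formats and return ISO 8601 (YYYY-MM-DD)."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def clean(records):
    """Drop duplicate ids (keeping the first), fix dates, and label ratings."""
    seen = set()
    cleaned = []
    for record in records:
        if record["id"] in seen:
            continue
        seen.add(record["id"])
        cleaned.append({
            "id": record["id"],
            "date": normalize_date(record["date"]),
            "rating": RATING_LABELS.get(record["rating"], "unknown"),
        })
    return cleaned
```

Raising on an unrecognized date, rather than silently passing it through, is a deliberate choice here: it surfaces format changes in the source API early.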

Scheduling and Automating Data Extraction

Automation is the backbone of an efficient extraction pipeline. Tools like cron jobs or orchestration frameworks can help.

- Cron Jobs: These run tasks at scheduled intervals. You could set up a job to run your data extraction script daily, weekly, or at any preferred time.

- Orchestration Tools: Advanced users might opt for tools like Airflow, which allow you to manage workflows and dependencies within your extraction processes more effectively. These tools also come with monitoring capabilities that alert you in case something goes wrong, ensuring your pipelines run smoothly.
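For the cron route, a minimal sketch of a schedulable entry point might look like the following. The crontab line in the comment uses assumed paths, and `extract_job` is a hypothetical placeholder for your real extraction function.

```python
import logging

# Example crontab entry (assumed paths) that would run a script daily at 02:00:
#   0 2 * * * /usr/bin/python3 /opt/pipeline/extract.py >> /var/log/extract.log 2>&1

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("extract_job")


def run_once(job):
    """Run one extraction and return a process exit code (0 means success)."""
    try:
        count = job()
    except Exception:
        # Log the full traceback so a failed scheduled run can be diagnosed later.
        logger.exception("Extraction failed")
        return 1
    logger.info("Extraction finished, %d records", count)
    return 0


def extract_job():
    """Placeholder for the real extraction; returns the number of records."""
    return 0
```

In a real script you would end with `sys.exit(run_once(extract_job))` under a `__main__` guard, so the scheduler sees a non-zero exit code when a run fails.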

Getting started with task scheduling can significantly streamline your workflows. For example, consider defining schedules that automate execution, like in the Data Orchestration process.

With these steps and insights, you’ll be well on your way to building a powerful automated data extraction pipeline from APIs. If you’re looking for personalized support or further training, consider checking out the personalized sessions offered by Data Engineer Academy. Also, look at the valuable insights on our YouTube channel to complement your learning journey.

Testing and Monitoring Your Pipeline

Building an automated data extraction pipeline isn’t just about piping data from API to destination; it’s about ensuring that the data flowing through is accurate and that your pipeline operates seamlessly over time. Let’s explore how you can implement robust testing and monitoring strategies.

Unit Testing Your Extraction Logic

To effectively test API calls and ensure data integrity, start by creating unit tests for your extraction logic. Unit tests help catch errors before they make it to production, giving you an early warning system for potential issues. Here’s a structured approach:

- Mocking API Responses: Use libraries like unittest.mock in Python to simulate API responses. This ensures you can test how your extraction logic reacts to both expected and unexpected data.

- Validating Response Structure: Confirm that the data returned from the API matches the expected structure. Write tests to ensure required fields are present and formatted correctly.

- Integration Tests: Beyond unit tests, employ integration tests to see how the extraction functions work within the broader system. This involves calling the actual API and ensuring the returned data integrates smoothly with subsequent pipeline stages.

- Continuous Integration (CI): Implement CI tools like Jenkins or GitHub Actions to run your tests automatically every time changes are made. This keeps your pipeline robust through updates.
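A minimal sketch of the first two ideas, mocking the API and validating structure, could look like this. The function `fetch_records`, the required fields, and the URL are hypothetical. The example patches the standard library’s `urlopen`; with Requests you would patch `requests.get` in the same way.

```python
import json
import unittest
import urllib.request
from unittest import mock

REQUIRED_FIELDS = {"id", "email"}  # hypothetical schema


def fetch_records(url):
    """Fetch a JSON list and reject records that lack required fields."""
    with urllib.request.urlopen(url, timeout=10) as response:
        records = json.loads(response.read().decode("utf-8"))
    for record in records:
        missing = REQUIRED_FIELDS - set(record)
        if missing:
            raise ValueError(f"Record missing fields: {sorted(missing)}")
    return records


def fake_response(payload):
    """Build a mock that behaves like the context manager urlopen returns."""
    response = mock.MagicMock()
    response.__enter__.return_value.read.return_value = json.dumps(payload).encode("utf-8")
    return response


class FetchRecordsTest(unittest.TestCase):
    @mock.patch("urllib.request.urlopen")
    def test_valid_payload(self, mock_urlopen):
        mock_urlopen.return_value = fake_response([{"id": 1, "email": "a@example.com"}])
        self.assertEqual(len(fetch_records("https://api.example.com/data")), 1)

    @mock.patch("urllib.request.urlopen")
    def test_missing_field_is_rejected(self, mock_urlopen):
        mock_urlopen.return_value = fake_response([{"id": 1}])
        with self.assertRaises(ValueError):
            fetch_records("https://api.example.com/data")
```

Saved in a test file, these cases can be run with `python -m unittest`, and no network access is needed because the API call is replaced by the mock.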

Regularly unit testing your extraction logic improves the reliability of your data integration process. For detailed guidance on avoiding mistakes during development, check out our post on Top Data Engineering Mistakes and How to Prevent Them.

Monitoring Extracted Data Quality

It’s crucial to monitor the quality of the data you extract to avoid downstream errors. Here are some practical methods to ensure your data meets quality standards:

- Data Validation Rules: Implement rules that check for data accuracy, completeness, and consistency. For instance, if you’re pulling customer records, ensure email fields are properly formatted.

- Anomaly Detection: Set up scripts to identify patterns or anomalies—like sudden spikes in data volume—that could indicate problems with your API or changes in data behavior.

- Logging and Alerts: Use logging tools to capture data pipeline activity and errors. Implement alerts to notify your team whenever there are issues or when certain thresholds are breached.

- Dashboards for Monitoring: Create visual dashboards that provide real-time insights into your data quality metrics and pipeline health. Tools like Grafana can be instrumental in bringing this data to life.
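The validation and anomaly checks might be sketched as follows. The required fields, the deliberately loose email pattern, and the 50% tolerance are assumptions to tune for your own data, not recommended values.

```python
import re
from statistics import mean

# A loose shape check, not full address validation.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(records, required=("id", "email")):
    """Return (index, problem) tuples; an empty list means the batch passed."""
    problems = []
    for index, record in enumerate(records):
        for field in required:
            if record.get(field) in (None, ""):
                problems.append((index, f"missing {field}"))
        email = record.get("email")
        if email and not EMAIL_PATTERN.match(email):
            problems.append((index, "malformed email"))
    return problems


def volume_is_anomalous(current_count, recent_counts, tolerance=0.5):
    """Flag a batch whose size deviates from the recent average by more than tolerance."""
    baseline = mean(recent_counts)
    return abs(current_count - baseline) > tolerance * baseline
```

The output of `validate` can feed directly into logging or alerting, and `volume_is_anomalous` gives a simple first signal before investing in more sophisticated detection.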

The goal is to keep your extracted data fresh and high-quality, which is vital for effective decision-making. For further insights into maintaining data quality, consider reading How Data Modeling Ensures Data Quality and Consistency.

Troubleshooting Common Issues

Even the best pipelines encounter issues. Being prepared to troubleshoot effectively can save you significant time and stress. Here are some common pitfalls and how to either avoid or resolve them:

- API Changes: APIs can change without notice. Track your API documentation and set up notifications for any updates to be proactive about breaking changes.

- Network Issues: Intermittent network problems can lead to failed API calls. Implement retry logic to handle temporary failures. A backoff strategy can help reduce the load on APIs during outages.

- Data Drift: Changes in the data being returned—such as new fields or different data types—can break your extraction logic. Regularly review your extracted data and adjust your tests to account for these changes.

- Rate Limiting: Many APIs impose rate limits to prevent abuse. Design your pipelines to respect these limits and use techniques like batching requests to stay within allowable ranges.
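Retry logic with exponential backoff, as mentioned under Network Issues, can be sketched like this. The attempt count, base delay, and jitter range are assumptions, and the caught exceptions are Python’s built-in `ConnectionError` and `TimeoutError`; adjust them to whatever your HTTP client raises.

```python
import random
import time


def call_with_retries(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call func(); on a transient failure wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the original error
            # Small random jitter keeps many clients from retrying in lockstep.
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
            sleep(delay)
```

With the defaults, the waits between attempts are roughly 1, 2, and 4 seconds. The `sleep` parameter exists only so tests can substitute a fake and run instantly.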

For more practical strategies to troubleshoot data engineering issues, look at our guide on Solve Real Data Engineering Challenges from Reddit.

By implementing thorough testing and monitoring processes, along with solid troubleshooting tactics, you’ll enhance the reliability of your automated data extraction pipeline and maintain high data quality. If you’re looking to improve your skill set even further, consider personalized training offered by Data Engineer Academy, and don’t forget to check out our YouTube channel for more insights!

Upskilling with Data Engineer Academy

In the fast-evolving world of data engineering, continuous skill enhancement is key to staying relevant and effective. As you think about building an automated data extraction pipeline from APIs, upskilling becomes not just an option but a necessity. Fortunately, Data Engineer Academy provides the resources and training you need to navigate this journey with confidence.

Why Consider Training?

Professional training in data engineering offers numerous benefits that can significantly enhance your skills and career prospects. Here’s why investing in training is an important step:

- Structured Learning: Training programs offer a well-organized curriculum that covers essential concepts and tools. This structured approach helps you grasp complex topics more easily than self-study.

- Expert Guidance: Learning from professionals who have hands-on experience in the field means you get insights that you won’t find in books or online articles. They can guide you through real-world challenges and provide context around your learning.

- Hands-On Projects: Many training courses incorporate project-based learning, allowing you to apply what you’ve learned in a practical setting. This real experience is invaluable for your confidence and competencies.

- Networking Opportunities: Connect with fellow learners and industry experts during your training. Building a network can lead to mentorship opportunities, job referrals, and collaborative projects.

- Staying Updated: The data engineering landscape is constantly changing. Professional training ensures that you stay informed about the latest tools, techniques, and best practices. For instance, courses on Snowflake for Beginners can provide you with foundational knowledge that’s increasingly in demand.

When you consider these benefits, it becomes clear that investing in your education is not just about gaining knowledge—it’s about carving a path to success in your data engineering career.

Available Courses and Resources

At Data Engineer Academy, a variety of courses and resources are tailored to help you develop the necessary skills for shaping your future in data engineering. Here’s a look at what’s available:

- Comprehensive Courses: Courses cover fundamental to advanced topics, including SQL, Azure, and system design. For instance, the SQL Tutorial (FREE) empowers you to craft intricate queries and manage databases effectively.

- Focused Training on Interviews: Preparing for job interviews is crucial. The SQL Data Engineer Interview course focuses on strategies and knowledge that can dramatically improve your performance in technical interviews.

- Certifications: To validate your skills, explore the Top Data Engineering Certifications for 2025. These programs offer recognized credentials that can enhance your resume.

- End-to-End Projects: The DE End-to-End Projects course lets you apply what you’ve learned in a realistic environment, preparing you for actual workplace scenarios.

- Supportive Community: Joining the Data Engineer Academy means you are part of a vibrant learning community. Participating in forums and discussions can provide further insights and support as you refine your skills.

- YouTube Resources: For ongoing learning and tips, don’t forget to check out the Data Engineer Academy YouTube channel for a variety of tutorials and insights.

Upskilling through Data Engineer Academy not only equips you with technical know-how but also empowers you to tackle real-world challenges in your data projects. Whether you’re new to the field or a seasoned professional looking to sharpen your skills, the resources offered here are invaluable. Remember, investing in your skills today is the first step toward a successful tomorrow!

Conclusion

Building an automated data extraction pipeline from APIs involves multiple key steps. Start by understanding how APIs work and selecting the right tools for extraction and transformation. Properly setting up your environment and implementing robust testing and monitoring practices ensures your pipeline operates smoothly and delivers high-quality data.

Don’t overlook the importance of continuous learning in this fast-changing field. Consider seeking personalized training from Data Engineer Academy to deepen your skills and expand your knowledge. There’s also a wealth of practical insights waiting for you on the Data Engineer Academy YouTube channel.

What aspects of automated data extraction are you most excited to explore further? Your journey into data engineering can start today—embrace the challenges and opportunities that lie ahead!
